Microsoft’s KOSMOS-2: A revolutionary AI Multimodal Large Language Model

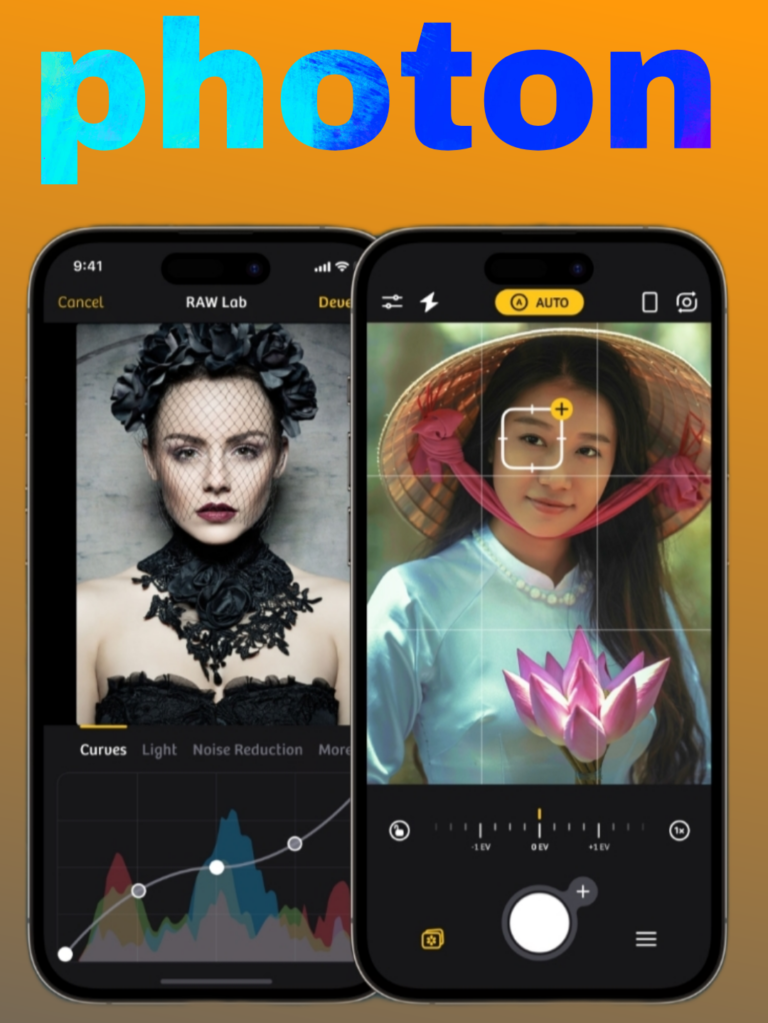

Microsoft has released another Multimodal Large Language Model Kosmos 2 and this is a multimodal large language model that is very interesting. Multimodal Large Language Models are essentially language models that you can basically use with all the modalities other than text. For example, with this large language model which is actually a working product and not just a research paper, you can actually submit images and get back responses .This is very big next step in the field of artificial intelligence. As you know Chat GPT has taken the world by storm. But every person right now who’s working in artificial intelligence is trying to move the needle by looking at image recognition and this is what KOSMOS-2 aims to do.

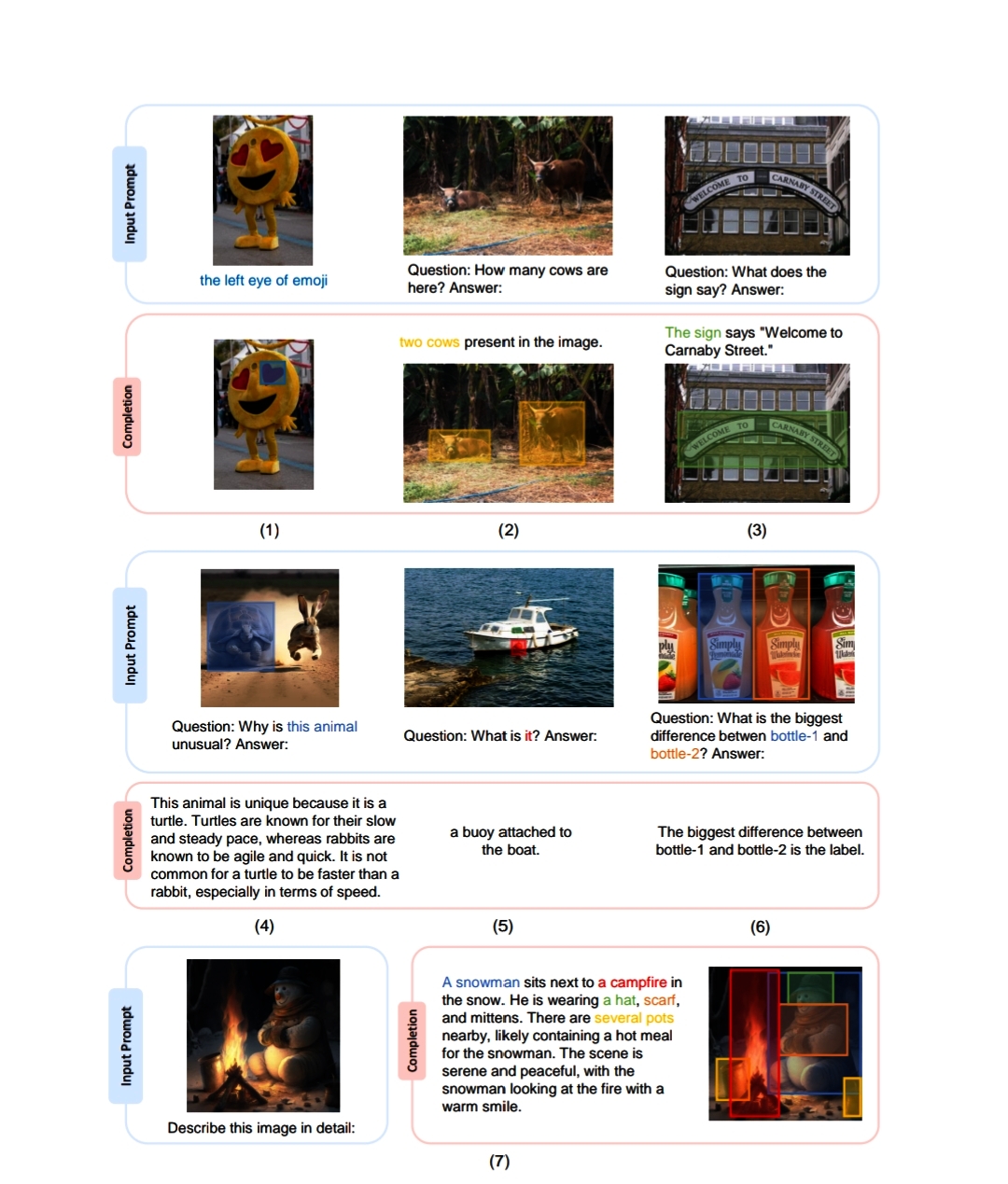

Microsoft is little different because of how they tackle certain tasks and and they have proved it the way they have tackled a Multimodal Large Language Model in their recently released research paper on KOSMOS-2. Microsoft introduce KOSMOS-2 as a Multimodal Large Language Model labeling new capabilities of perceiving object description and grounding text to the visual world. In addition to the existing capabilities of MLLMs (e.g., perceiving general modalities, following instructions, and performing in-context learning), KOSMOS-2 integrates the grounding capability into downstream applications. KOSMOS-2 is evaluated on a wide range of tasks, including:

(i) multimodal grounding,such as referring expression comprehension, and phrase grounding,

(ii) multimodal referring, such as referring expression generation,

(iii) perception-language tasks,and

(iv) language understanding and generation.

For vision-language tasks, the ability to establish a connection can provide a more convenient and efficient interface between humans and AI. The model has the capacity to comprehend the specific area of the image through its geographic coordinates, enabling users to directly indicate the object or region in the picture instead of providing extensive textual explanations as references. Kosmos-2 MLLM is going to be a key step towards Artificial General intelligence and essentially if you don’t know what AGI is , that is an AI system which is capable of doing pretty much any task and its going to be better than humans at literally everything.So, what exactly is KOSMOS-2 and what it can do.

From the sample images of Microsoft research papers they showcased how good it is at recognizing and categorizing images and then off course grounding them in reality. The experimental findings demonstrate that KOSMOS-2 not only excels in the grounding assignments (phrase grounding and comprehension of referring expressions) and referring tasks (generation of referring expressions) but also achieves a strong performance in the language and vision-language tasks evaluated in KOSMOS-1. Sample image visually depicts how the incorporation of the grounding functionality enables KOSMOS-2 to be employed in supplementary downstream tasks, including captioning images based on contextual understanding and answering visually-grounded questions.”